I’m known for liking a good old moan about things, and I’m also known for being a bit old-fashioned in my ways and values despite my age. I don’t normally talk about that part of me on my blog as I like to keep it technical here, but when something overlaps with technology, it’s hard not to get it out there.

When I was growing up, we had friends, and friends were people who you went out and socialised with, people who you’d call on the phone to see how they were doing or how their life was going. Now, in the year 2014, what on earth has happened to the concept of friends? Did the old definition get completely unwritten and nobody told me? I checked the Oxford dictionary and the definition for the noun friend reads as follows:

A person with whom one has a bond of mutual affection, typically one exclusive of sexual or family relations

The synonyms show you that a friend is someone close to you, with words like intimate, confidante, soul mate and brother or sister given, and Oxford tells us that the origins of the word friend are Germanic, from a root meaning ‘to love’.

Just this weekend, I met someone at a party and spent no more than fifteen minutes in total talking to said individual. I didn’t dislike him at all so there’s no problem there, but does meeting a stranger at a party and spending fifteen minutes with them really constitute a friendship these days, and how does that affect the things that we should be holding closest and dearest to us?

On Facebook right now, I have 60 friends. All of these people are either family or friends who I am some-which-way interested in hearing from, or whose posts I actually care to read (although I do sometimes wish that I could unfriend some people for the amount of ‘share this’ and ‘look at this’ rubbish they post).

I did a straw poll on Twitter earlier today and granted, my follower base isn’t particularly large and those who do follow me are going to be biased towards me in a like-minded sense, but both of the people who responded said the same thing: they only friend people on Facebook who they actually know. So why are a lot of people out there so willing to throw friend invitations on Facebook around like sweets and confetti? Surely a friendship on Facebook should be something reserved for the people who you actually hold in that esteem? Not only does having a mammoth collection of friends clutter your News Feed with information and status updates that you are largely going to ignore and not care about, but you are also exposing yourself to people who you don’t really know. Not that I am trying to victimise her in this post, but my wife currently has 320 friends on Facebook, and whilst she definitely has a wider circle of friends and interacts with more people than me, is it really five times greater than mine or is she collecting friends for the sake of it (bearing in mind here that she accepted the friend request from the same person I received an invitation from at the weekend)?

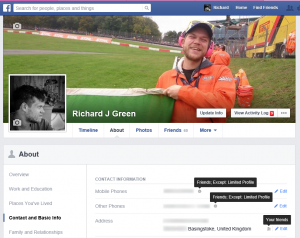

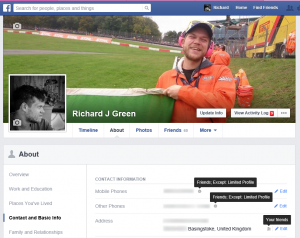

I took a couple of screenshots of my Contact Info page from my Facebook profile earlier today and overlaid them on top of each other so that I can show the whole scene in one picture. As you can see from the picture, my contact information is shared with friends, and this includes my mobile and home phone numbers and my home address. Although not shown (as it’s further down the page, beyond the fold), my email address is also shared with friends.

I know that the Facebook advocates amongst you will say that you can customise this and change who can see your information, but that then brings its own questions. Firstly, who actually thinks about what that person might be able to see before accepting the friend request, given that the decision to accept or decline has probably become a reflex action for most? Secondly, what are the privacy options if you wanted to limit that person’s access to your information? I took a look at the privacy options for my phone number and the choices are Friends of Friends, Friends, Only Me or Specific People.

Friends of Friends is just utter lunacy. Why would I want to share my phone number with the friends of my friends when I have no control over who they friend in turn? Friends is a logical option and Only Me defeats the purpose of adding the information to your profile in the first place. Specific People is the ideal option if you are a bit of a friend collector or very privacy conscious, but who is really going to remember, after accepting that friend request, to go and edit the list of people who are allowed or denied to see their information? What’s more, I highly suspect that this isn’t a setting which you can edit from the mobile applications, which makes it hard to administer too.
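To make the difference between those four options concrete, here is a minimal sketch of how such an audience check might behave. This is purely my own toy model and not Facebook’s actual implementation or API: the `Audience` enum, the `can_see` function and the names in the example friend graph are all hypothetical, made up for illustration.

```python
from enum import Enum


class Audience(Enum):
    # The four choices offered for the phone number field (toy model only).
    ONLY_ME = "only_me"
    SPECIFIC_PEOPLE = "specific_people"
    FRIENDS = "friends"
    FRIENDS_OF_FRIENDS = "friends_of_friends"


def can_see(viewer, owner, audience, friends, allowed=frozenset()):
    """Return True if `viewer` may see a field on `owner`'s profile.

    `friends` maps each person to the set of people they are friends with.
    `allowed` is only consulted for the Specific People setting.
    """
    if viewer == owner:
        return True
    if audience is Audience.ONLY_ME:
        return False
    if audience is Audience.SPECIFIC_PEOPLE:
        return viewer in allowed
    owner_friends = friends.get(owner, set())
    if viewer in owner_friends:
        return True
    if audience is Audience.FRIENDS_OF_FRIENDS:
        # Anyone befriended by any of my friends gets in, and I have no say.
        return any(viewer in friends.get(f, set()) for f in owner_friends)
    return False


# Hypothetical friend graph: I never accepted the party guest, but my wife did.
friends = {
    "me": {"wife"},
    "wife": {"me", "party_guest"},
    "party_guest": {"wife"},
}

guest_sees_with_friends = can_see("party_guest", "me", Audience.FRIENDS, friends)
guest_sees_with_fof = can_see("party_guest", "me", Audience.FRIENDS_OF_FRIENDS, friends)
```

In this model the party guest cannot see my number under Friends, but can under Friends of Friends, simply because somebody else accepted the request on my behalf.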

Your contact details, where you live, your email address and other personal data nuggets are important pieces of personal information, or Personally Identifiable Information (PII) as the world has come to know it, and this information should be protected at all costs, not made available to somebody at the acceptance of a friend request. If the Facebook account of somebody in your friends list was hacked, then your information could become part of the next wave of phishing scams or nuisance telephone calls.

Aside from the PII though, there is the day-to-day aspect: do you actually want to see what said individual is posting status messages about, or know what they liked and shared? The answer is most likely not, especially if you are already dealing with a high volume of News Feed clutter. The other side of this issue is more personal, and the response will vary from person to person according to how much of their lives they want to publicise, but do I want people who I only know in the loosest of senses to know what I am doing, and do I want my status updates appearing in their News Feed? If I post a message that I’m having a great day out with my kids because I want to share the fact that I’m having a great time, enjoying a day with my family, how do I know that the person I only met for fifteen minutes isn’t a professional crook who, now armed with my home address and the knowledge that I am out for the day with my kids, is going to come and burgle my house for all my prized, hard-earned possessions? The blunt answer is that you don’t know these things, because you probably don’t know enough about the people you friend on Facebook to make that judgement call.

For all my rambling in this post, the crux of the issue for me is that the definition of friends seems to have negatively evolved as social media has made people far more accessible to other people. I think that it is a good thing in many respects as it allows us to connect with the people that we care most about in ways that we couldn’t have done previously, and people in this category are truly the real friends in life. On the other side though, I also think that there is a high degree of over-sharing that goes on. People are making their lives too publicly accessible for the consumption of those that they barely know at all, and they aren’t considering the implications of clicking that little blue accept button before they do it. Not only does that mean that each time you look at Facebook you have to wade through the endless scrolling page of tripe to reach the good stuff, consequently wasting your own time, but you are also exposing yourself and your information to those people. If I wouldn’t give somebody I met at a party my phone number, why would I connect with them as a friend on Facebook, when the two are tantamount to the same thing?