Cleaning Up Active Directory and Cluster Computer Accounts

Recently at work, I’ve been looking at cleaning up our Active Directory domain, namely removing stale user and computer accounts. To do this, I wrote a short but sweet PowerShell script which gets all of the computer objects from the domain and includes the LastLogonTimestamp and pwdLastSet attributes to show when each computer account was last active. However, I came across an interesting problem with cluster computer objects.

Import-Module ActiveDirectory

Get-ADComputer -Filter * -SearchBase "DC=domain,DC=com" -Properties Name, LastLogonTimestamp, pwdLastSet -ResultPageSize 0 | Select Name, @{n='LastLogonTimestamp';e={[DateTime]::FromFileTime($_.LastLogonTimestamp)}}, @{n='pwdLastSet';e={[DateTime]::FromFileTime($_.pwdLastSet)}}, DistinguishedName

When reviewing the results, it seemed as though Network Names for Cluster Resource Groups weren’t updating their LastLogonTimestamp or pwdLastSet attributes even though those Network Names are still in use.

After a bit of a search online, I found a TechNet Blog post at http://blogs.technet.com/b/askds/archive/2011/08/23/cluster-and-stale-computer-accounts.aspx which describes exactly that situation. The LastLogonTimestamp attribute is only updated when the Network Name is brought online, so if you’ve got a rock solid environment and your clusters don’t fail over or come crashing down too often, this object will appear as though it’s stale.

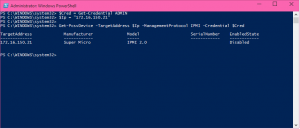
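To make the "appears stale" point concrete, here is a minimal sketch of how a raw LastLogonTimestamp or pwdLastSet value might be tested against an age cut-off. The function name `Test-StaleFileTime`, the 90-day default and the `-Now` parameter are my own assumptions for illustration; they are not part of the original script or the TechNet article.

```powershell
# Hypothetical helper (not from the original script): decides whether a raw
# FILETIME value, as stored in LastLogonTimestamp or pwdLastSet, is older
# than a chosen number of days.
function Test-StaleFileTime {
    param(
        $FileTime,                      # raw Int64 value from Active Directory
        [int] $ThresholdDays = 90,      # assumed cut-off, adjust to your policy
        [DateTime] $Now = (Get-Date)    # injectable so the check is repeatable
    )

    # A missing or zero value means the attribute was never set.
    if (-not $FileTime) { return $true }

    # Same conversion the script uses for its calculated columns.
    $lastSeen = [DateTime]::FromFileTime([long]$FileTime)

    return ($Now - $lastSeen).TotalDays -gt $ThresholdDays
}
```

A cluster Network Name that was last brought online 200 days ago would be flagged by a check like this even though it is in daily use, which is exactly why these accounts need separate handling.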

To save you reading the article, I’ve produced two updated versions of the script. This first amendment simply adds the servicePrincipalName column to the result set so that you can verify the cluster accounts for yourself.

Import-Module ActiveDirectory

Get-ADComputer -Filter * -SearchBase "DC=domain,DC=com" -Properties Name, LastLogonTimestamp, pwdLastSet, servicePrincipalName -ResultPageSize 0 | Select Name, @{n='LastLogonTimestamp';e={[DateTime]::FromFileTime($_.LastLogonTimestamp)}}, @{n='pwdLastSet';e={[DateTime]::FromFileTime($_.pwdLastSet)}}, servicePrincipalName, DistinguishedName

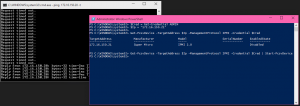

This second amended version uses the -Filter parameter of the Get-ADComputer cmdlet to filter out any computer accounts whose servicePrincipalName includes MSClusterVirtualServer, the value which designates an account as a cluster computer object.

Import-Module ActiveDirectory

Get-ADComputer -Filter 'servicePrincipalName -NotLike "*MSClusterVirtualServer*"' -SearchBase "DC=domain,DC=com" -Properties Name, LastLogonTimestamp, pwdLastSet, servicePrincipalName -ResultPageSize 0 | Select Name, @{n='LastLogonTimestamp';e={[DateTime]::FromFileTime($_.LastLogonTimestamp)}}, @{n='pwdLastSet';e={[DateTime]::FromFileTime($_.pwdLastSet)}}, DistinguishedName

The result set generated by this second amendment of the script is exactly the same as the output of the original script, with the notable exception that the cluster objects are automatically filtered out of the results. This just leaves you to ensure that when you retire clusters from your environment, you perform the relevant clean up afterwards to delete their accounts. Alternatively, you could use an automation tool such as Orchestrator to manage the decommissioning of your clusters and include this as an action for you.
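For that clean up step, it can help to go the other way and list only the cluster accounts, so they can be checked against the clusters you know are still in service. The sketch below does the check client-side. `Test-ClusterAccount` is a hypothetical helper name of my own, and the commented usage assumes the same placeholder SearchBase as the scripts above.

```powershell
# Hypothetical helper: returns $true when any servicePrincipalName value
# on the object contains MSClusterVirtualServer.
function Test-ClusterAccount {
    param($Computer)

    # -like against a collection returns the matching elements, so a
    # non-empty result means at least one SPN matched. A missing
    # attribute ($null) simply evaluates to $false.
    return [bool]($Computer.servicePrincipalName -like '*MSClusterVirtualServer*')
}

# Example usage against a domain (needs the ActiveDirectory module):
# Get-ADComputer -Filter * -SearchBase "DC=domain,DC=com" -Properties servicePrincipalName |
#     Where-Object { Test-ClusterAccount $_ } |
#     Select-Object Name, DistinguishedName
```

Filtering on the server with -Filter, as the second amendment does, is the better choice for large domains; a client-side check like this one is only convenient when you already have the full result set in hand and want to split it into cluster and non-cluster accounts.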