Project Home Lab: Open Server Surgery

So with my recent bout of activity on the home lab project front, evident from my previous posts Project Home Lab: Server Build and Project Home Lab: Servers Built, I’ve forged ahead and got some of the really challenging and blocking bits of the project done over Christmas. I alluded to what I needed to do next in the post Project Home Lab: Servers Built. All of this work paves the way for me to get the project completed in good order, hopefully all during January, at long last.

In this post I’m just going to gloss over some of the details about the home server move that I completed over the weekend. Lots more hours of thinking, note taking and planning were involved in this and most likely more than should have gone into it but I don’t like failure so I like to make sure I have all the bases covered and all the boxes ticked. Most critically, I had to arrange an outage window and downtime with the wife for this to happen.

Out with the Old

The now previous incarnation of my Windows Server 2012 R2 Essentials home server lived in a 4U rack mount chassis. As it was the only server I possessed at the time, I never bothered with rack mount rails, so problem one was that the server was just resting atop my UPS in the bottom of the rack.

Problem two, which luckily has never bitten me but has long bothered me, is that the server ran on desktop parts inside a server chassis. As a result, it had no IPMI interface for out of band management, so if something went wrong with the Windows install or a warning came up in the BIOS, I had no way to remotely access the keyboard, video and mouse (a KVM no less). It had an Intel Core i5 3740T processor with an ASUS ATX motherboard and unregistered unbuffered memory with a desktop power supply, albeit a high quality Corsair one. All good quality hardware but not optimal for a server build.

The biggest problem, however, was that the 4U chassis, a previous purchase from X-Case a couple of years ago, sat at 4U tall but only had capacity for ten external disks. I had two 2.5″ SSDs for the operating system mounted internally in one of the 5.25″ bays in a dual 2.5″ drive adapter in addition to the external drives. It all worked nicely but it wasn’t ideal as my storage needs are growing and I only had two free slots. Although not a problem, the hot swap drive bays, which were added to the chassis with an aftermarket upgrade from X-Case, didn’t use SAS Multilane SFF-8087 cables but instead used SATA connections, which meant that from my LSI 9280-16i4e RAID Controller I had to use SAS to SATA Reverse Fanout cables, which made the whole affair a bit untidy.

None of this is X-Case’s fault, let us remember. The case did its job very well but its capabilities no longer met my evolving and increasingly demanding needs.

Planning for the New

Because I like there to be order in the force, per my shopping list at Project Home Lab: Shopping List, I bought a new 3U X-Case chassis for my home server at the same time as buying up the lab components. Getting the home server set straight is priority one because the 4U chassis is a blocker to me getting any further work done, as the 3U and 2U lab servers need to fit in above it. In addition to moving chassis, I’ve given it an overhaul with a new motherboard and CPU to match the hardware in the lab environment. A smaller catalogue of parts means less knowledge required to maintain the environments and means I have an easy way of upgrading or retro-fitting in the future with the single design ethos.

As anyone knows, changing the motherboard, processor and all of the underlying system components in a Windows server is potentially a nightmare in the making, so I had to plan well for this.

I had meticulously noted all of the drive configurations from the RAID Controller down to the last detail, I had noted which drives connected to which SATA port on which controller port and I had a full backup of the system state to perform a bare metal recovery if I needed. All of our user data is backed up to Azure so that I can restore it if needed, although in honesty I didn’t expect any problems with the data drives. It was the operating system drives I was most concerned about.

In with the New

After getting approval for the service outage from the wife and shutting down the old home server, I got it all disconnected and removed from the rack. I began the painful process of unscrewing all of my eight drives from the old chassis drive caddies and the two internal drives and reinstalling them into the new caddies using the 2.5″ to 3.5″ adapters from the shopping list. I think I probably spent about 45 minutes carefully screwing and unscrewing drives and at the same time, noting which slot I removed them from and which slot I installed them into.

With all the drives moved over, I moved over the RAID Controller and connected up the SAS Multilane SFF-8087 cables to the connector with the tail end already connected to the storage backplanes in the chassis.

Once finished, I connected up the power and the IPMI network port on the home server which I had already configured with a static IP as the home server is my DHCP Server so it wouldn’t be able to get an automatic lease address. I got connected to the IPMI interface okay and powered the server on using it and quickly flipped over to the Remote Control mode which I have to say, works really nicely even when you consider that it’s Java based.

Up with the New

While I was building the chassis for the home server, I had already done some of the pre-work to minimize the downtime. The BIOS was already upgraded to the latest version along with the on-board SAS2008 controller and the IPMI firmware. I had also already configured all of the BIOS options for AHCI and a few other bits (I’ll give out all of the technicalities of this in another post later).

First things first, the Drive Roaming feature on the LSI controller, which I blogged about previously in Moving Drives on an LSI MegaRAID Controller, worked perfectly. All 9 of the virtual drives on the controller were detected correctly, the RAID1 Mirror for the OS drives stayed intact and I knew that the first major hurdle was behind me. A problem here would have been the most significant threat to timely progress.

The boot drive was hit okay from the LSI RAID Controller BIOS and the Windows Server 2012 R2 logo appeared at least showing me that it was starting to do something. It hung here for a couple of minutes and then the words “Getting Devices Ready” appeared. The server hung here for at least another 10 minutes at which point I was starting to get worried. Just when I was thinking about powering it off and moving all the drives back and reverting my changes, a percentage appeared after the words “Getting Devices Ready”, starting at 35% and it quickly soared up to 100% and the server rebooted.

After this reboot, the server booted normally into Windows. It took me about another hour after this to clean up the server. First I had to reconfigure my network adapter team to include the two on-board Gigabit Ethernet adapters on the Supermicro motherboard as I am no longer using the Intel PCIe adapter from the old chassis. Then, using the set devmgr_show_nonpresent_devices=1 trick, I removed all of the references to and uninstalled the drivers for the old server hardware.
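For anyone who hasn't used the trick, here is a rough Python sketch of the same idea, included purely as an illustration. The helper names `nonpresent_devices_env` and `launch_device_manager` are made up for this example and are not what I actually ran; the original approach is simply the `set` command in a command prompt followed by opening Device Manager from that same prompt. It assumes an elevated session on Windows, and you still need to tick View > Show hidden devices once Device Manager opens.

```python
import os
import subprocess
import sys


def nonpresent_devices_env(base_env=None):
    """Return a copy of the environment with the Device Manager flag set.

    The variable name comes from the post; everything else is illustrative.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["devmgr_show_nonpresent_devices"] = "1"
    return env


def launch_device_manager():
    """Start Device Manager so that it inherits the flag (Windows only).

    Assumes the script is run from an elevated prompt, since the
    management console normally needs administrator rights.
    """
    if sys.platform != "win32":
        raise RuntimeError("Device Manager is only available on Windows")
    return subprocess.Popen(
        ["mmc.exe", "devmgmt.msc"],
        env=nonpresent_devices_env(),
    )


if __name__ == "__main__" and sys.platform == "win32":
    launch_device_manager()
```

The point of building the environment separately is that the flag only applies to the process that inherits it, which is the same reason the manual version has to launch Device Manager from the prompt where the variable was set.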

After another reboot or two to make sure everything was working properly and a thorough check of the event logs for any Active Directory, DNS or DHCP errors and a test from my 3G smartphone to make sure that my published website was running okay on the new server, I called it a success. One thing I noted of interest here was that Windows appeared to not require re-activation as I had suspected it would. A motherboard and CPU family change would be considered a major hardware update which normally requires re-activation of the license key but even checking now, it reports activated.

Here are some Mr. Blurrycam shots of the old 4U chassis after I removed it and the new 3U chassis in the rack.

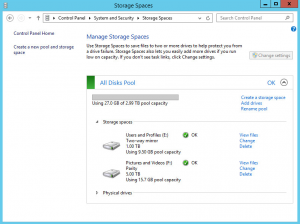

As you can see from the second picture, the bottom 3U chassis is powered up and this is the home server. In disk slots 1 and 5 I have the two Intel 520 Series SSDs which make up the operating system RAID1 Mirror and in the remaining eight populated slots are all 3TB Western Digital Red drives.

Above the home server is the other 3U chassis which will be the Lab Storage Server once all is said and done and at the very bottom I have the APC 1500VA UPS which is quite happy at 20% load running the home server along with my switches, firewall and access points via PoE. I’ll post some proper pictures of the rack once everything is finished.

Behind the scenes, I had to do some cabling in the back of the rack to add a new cable for the home server IPMI interface, which I didn’t have before. The existing cables for the home server NIC Team were also a bit too tight for my liking, caused by the 3U Lab Storage Server above being quite deep and pulling on them slightly. To fix this, I’ve patched up two new cables of longer length and routed them properly in the rack. I’ve got a lot of cables to make soon for the lab (14 no less) and I will be doing some better cable management at the same time as that job. One of the nice touches on the new X-Case RM316 Pro chassis is the front indicators for the network ports, both of which light up and work with the Supermicro on-board Intel Gigabit Ethernet ports. The fanatic in me wishes they were blue LEDs for the network lights to match the power and drive lights but that’s not really important now, is it?

More Home Server Changes

The home server has now been running for two days without so much as a hiccup or a cough. I’m keeping an eye on the event logs in Windows and the IPMI alarms and sensor readings during the bedding-in period and it all looks really happy.

To say thank you to the home server for playing so nicely during its open server surgery, I’ve got three new Western Digital 5TB drives to feed it some extra storage. Two of the existing 3TB drives will be coming out to make up the bulk storage portion of the Lab Storage Server Storage Space and one drive will be an expansion, giving me a gross uplift of 9TB capacity in the pool. I would be exchanging the 3TB drives in the home server with larger capacity drives one day in the future anyway so I figured I may as well do two of them early and make good use of the old drives for the lab.
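For the curious, the 9TB figure works out as follows. This is just a quick sanity check of the arithmetic using the drive sizes above; the function name is made up for the example and the number is raw capacity, before any RAID or resiliency overhead is taken off.

```python
def gross_uplift_tb(new_tb, old_tb, swapped, added):
    """Raw capacity gained when some drives are swapped for larger ones
    and others are added to previously empty slots."""
    return swapped * (new_tb - old_tb) + added * new_tb


# Two 3TB drives swapped for 5TB drives, plus one extra 5TB drive.
uplift = gross_uplift_tb(new_tb=5, old_tb=3, swapped=2, added=1)
print(uplift)  # 2 * (5 - 3) + 1 * 5 = 9
```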

I’m also exploring the options of following the TechNet documentation for transitioning from Windows Server 2012 R2 Essentials to Windows Server 2012 R2 Standard. You still get all of the Essentials features but on a mainline SKU, which means fewer potential issues with software (like System Center Endpoint Protection for example, which won’t install on Essentials). On this point I’m waiting for some confirmation of the transition behaviour in a TechNet Forum question I raised at https://social.technet.microsoft.com/Forums/en-US/d888f37a-e9e9-4553-b24c-ebf0b845aaf1/office-365-features-after-windows-server-standard-transition?forum=winserveressentials&prof=required as the TechNet article at http://technet.microsoft.com/en-us/library/jj247582 leaves a little to be desired in terms of information.

I’m debating buying up some Lindy USB Port Blockers (http://www.lindy.co.uk/accessories-c9/security-c388/usb-rj-45-port-blockers-locks-c390/usb-port-blocker-pack-of-4-colour-code-blue-p2324) for the front access USB ports on all the servers so that it won’t be possible for anyone to insert anything in the ports without the unlocking tool to open up the port first. See if you can guess which colour I might buy?

Up Next

Next on my to do list is the re-addressing of the network, breaking out my hacksaw and cabling.

The re-addressing of the network is to make room for the new VLANs and associated addressing which I will be adding for the lab, and my new addressing schema makes it much easier for me to manage longer term. This is going to be a difficult job, much like the job I’ve just finished. I’ve got a bit of planning to finish for this before I can do it so this probably won’t happen now until after new year.

The hacksaw, as drastic as that sounds, is for the 2U Hyper-V server which you may notice is not racked in the picture above. For some reason, the sliding rails for the 2U chassis are too long for my rack and with the server installed on the rails and pushed back, it sits about an inch and a half proud of the posts, which not only means I can’t screw it down in place but also that I can’t close the rack door. I’m going to be hacking about two inches off the end of the rails so that I can get the server to sit flush in the rack. It’s only a small job but I need to measure twice and cut once as my Dad taught me.

As I mentioned before, I’ve got some 14 cables I need to make and test for the lab and this is something I can be working on in parallel to the other activities so I’m going to be trying to make a start on these soon so that once I have the 2U rails cut to size correctly, I can cable up at the same time.