Automatically Label the OS Drive on New VMs

In my quest for private cloud (and public) nirvana, I’m always looking for ways to automate parts of the first run user experience so that as IT Pros, we can build and deliver services to users which fit the bill right out of the gate. In a previous post from earlier this year, in a post entitled Automatically Assign DVD Drive Letter VMM Private Cloud, I walked you through the process of using a PowerShell script that would run as a GUI Run Once script as part of a VMM initiated virtual machine deployment to set the DVD Drive letter.

Since I posted this article, I’ve made a couple of improvements to the environment that I wanted to share with you all and in this first post, I will cover off how to automatically label and name the OS drive on our newly deployed virtual machines. This process involves applies registry keys. As with my first post, you could achieve the same results with Group Policy, however I like all of my modifications to be applied to the local machine so that if the machine is deployed as a non-domain joined server into a DMZ or if there is an issue with the first time Group Policy gets processed, these settings still get applied but I will cover both methods here. This would also work in a multi-tenant or hosting environment where VMs may not being landing in your own domain or environment.

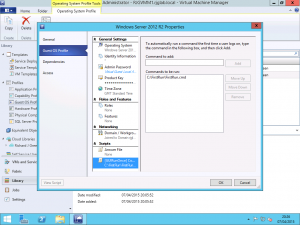

Add the Script to the VM Template

If you followed my previous post, you will be familiar with mounting the .vhd file for the VM Template on another server to modify the local file system. If you are unsure of this, please refer back to my original article Automatically Assign DVD Drive Letter VMM Private Cloud for guidance.

With the .vhd file mounted, we are going to add a new PowerShell script to the FirstRun folder named Set-OSDriveLabel.ps1 and it will contain the following.

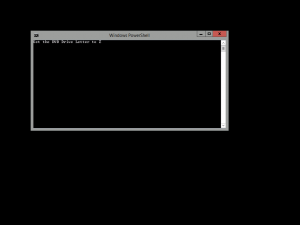

# Set-OSDriveLabel.ps1 # v1.0 2nd June 2015 by Richard J Green # Sets the OS Install Volume Label to the Value in the DriveLabel Variable $DriveLabel = "OS" $OSDrive = $env:SystemDrive $OSDrive = $OSDrive.Substring(0,$OSDrive.Length-1) New-Item -Path HKLM:\SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Explorer\DriveIcons -Name $OSDrive -Force New-Item -Path HKLM:\SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Explorer\DriveIcons\$OSDrive -Name DefaultLabel -Force Set-Item -Path HKLM:\SOFTWARE\Wow6432Node\Microsoft\Windows\CurrentVersion\Explorer\DriveIcons\$OSDrive\DefaultLabel -Value $DriveLabel -Force

Short and sweet, this script will detect the Windows installation drive from the PowerShell SystemDrive environment variable and set this drive letter to use the label OS as defined in the DriveLabel variable.

One important note here is that this setting is applied to the Wow6432Node on a 64-bit server. If you were applying this to a client OS that was 32-bit then you would need to remove the Wow6432Node portion of the registry key location. I find this a peculiar one given that this change effects Windows Explorer which is a 64-bit process.

With the PowerShell script saved in the FirstRun folder, we need to update the FirstRun.cmd wrapper script that invokes the containerised PowerShell scripts in the appropriate escalated manner. Simply add the following lines to the script before the clean-up section at the end.

:: Launch PowerShell and Label the OS Drive to OS echo Set OS Drive Label to OS PowerShell.exe -NoLogo -Sta -NoProfile -ExecutionPolicy Unrestricted -File %SystemDrive%\FirstRun\Set-OSDriveLabl.ps1

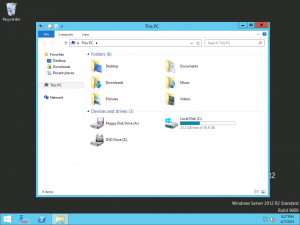

I hope this takes away another manual step from your VM build processes and brings you one step closer to nirvana. In another post coming soon, I will have instructions on how to hide some of the folders from the This PC or “My Computer” folder which don’t really belong on a server and another post to clarify the steps on creating Network Locations for the This PC folder.